Week 1: Discrete Math Review

Chris Tralie

Debrief

The purpose of today's class was to warm us up on some discrete math. We remembered a proof is a thing we do when we're too tired to do any more examples and we want to convince ourselves that every example of a particular proposition (proposed statement about a truth) is in fact true from now on.

We spent most of class today talking about proofs by induction. We also briefly mentioned the pigeonhole principle. These are tools we'll need from time to time in the course. I'll rehash the examples we did below and provide some other ones, including a brief section on truth tables since we didn't get to that. For more reading beyond these examples, please refer to Sipser Ch. 0 and PIFLAS21 Ch. 2.3

Proofs by Induction

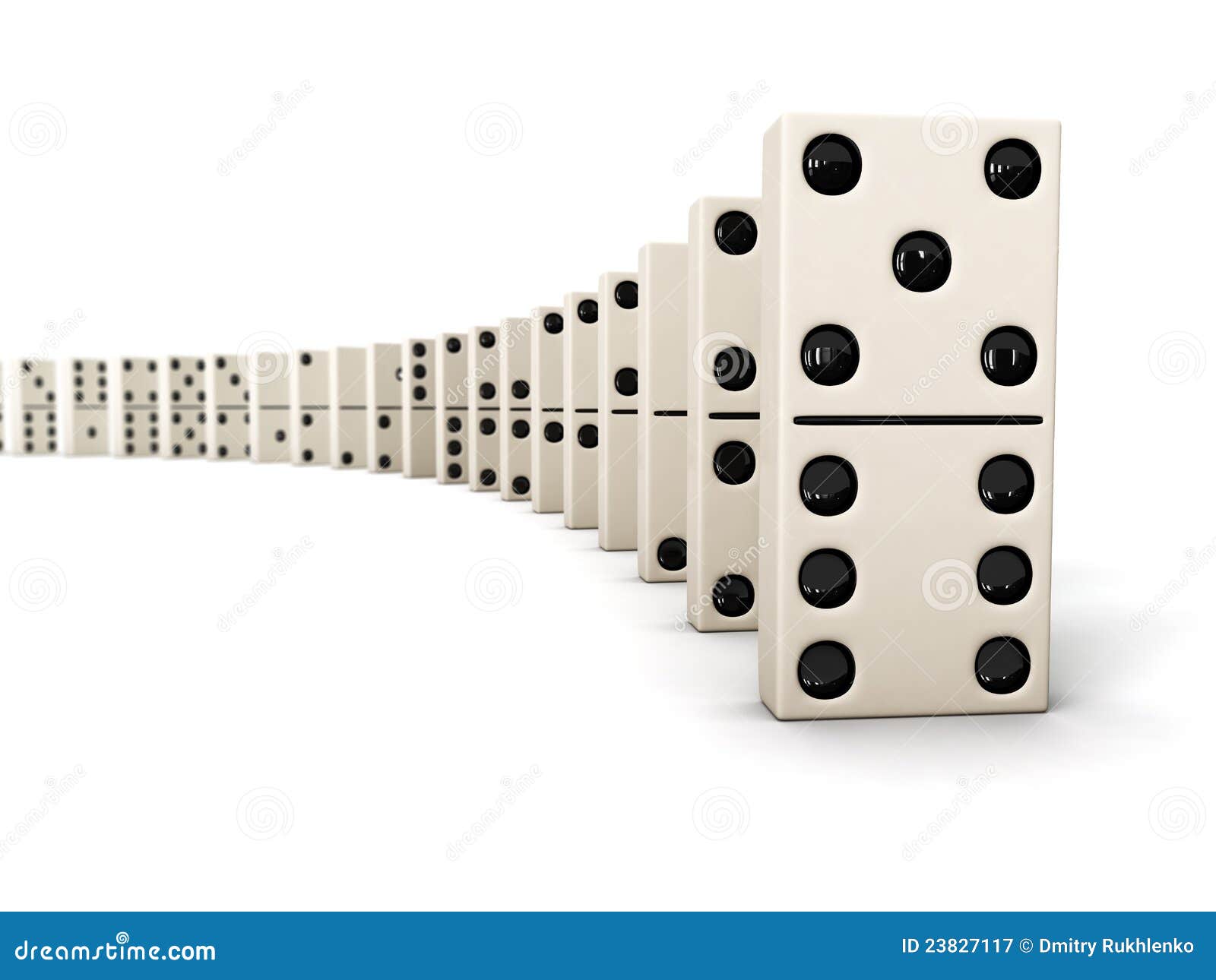

Proofs by induction are usually applied to propositions over natural numbers, each of which can be though of a different domino in line of infinite dominoes. A proof by induction is like all of infinite dominoes falling. We start off the first domino, and then all we need to show is that we can get from one domino to the next, and the whole thing goes crashing down forever. There are three parts that we'd like to see in a formal proof by induction:

- The base case. This is analogous to the first domino

- The inductive hypothesis. This is the assumption that a particular domino right before we are has fallen down

- The inductive step. This is how we use the inductive hypothesis to go from one domino to the next.

This is easiest to see through some examples, so let's review them

Example 1: Arithmetic Series ➕

Prove that for all N >= 1

\[ 1 + 2 + ... + N = \frac{N(N+1)}{2} \]

Let's get together all of the ingredients we need

Base case:

We should start at N = 1 and plug that in. It is indeed true that\[ 1 = 1(2)/2 \]

So we're finished with this part

Inductive hypothesis:

We simply assume the proposition is true for some arbitrary N

\[ 1 + 2 + ... + N = \frac{N(N+1)}{2} \]

Inductive step:

Let's see if we can use some facts from algebra to show that if our inductive hypothesis is taken to be true for N, that we can prove our proposition for N+1. First, let's just go ahead and plug N+1 into the formula

\[ (1 + 2 + ... + N) + (N+1) = \frac{(N+1)(N+2)}{2} \]

Our job will be to show that this equality actually holds. We can substitute our inductive hypothesis in for the first group of terms in the first set of parentheses

\[ \frac{N(N+1)}{2} + (N+1) = \frac{(N+1)(N+2)}{2} \]

We're getting very close now. Let's come up with a common denominator for the left side

\[ \frac{N(N+1) + 2N + 2}{2} + (N+1) = \frac{(N+1)(N+2)}{2} \]

And finally, we'll distribute, foil, and combine like terms on both sides to see that the equation does indeed hold.

\[ \frac{N^2 + 3N + 2}{2} = \frac{N^2 + 3N + 2}{2} \]

We carried through all of the steps, and so this concludes the proof!

Example 2: Making Change 🪙

Let's say we want to make change with 2 cent and 5 cent coins. We want to prove that it's possible to do this for all amounts of change that are N >= 4 cents. As people noticed in class, there is an easier way to prove that this is possible by simply showing how to do it, or making a proof by construction. But for the sake of reviewing induction, let's follow that recipe first.

Inductive step:

Here our base case actually starts at N = 4. We know it's possible to make 4 cents with 2x2cent coins, so we're finished this part

Inductive hypothesis:

The inductive hypothesis is that it's possible to make change with 2cent and 5cent coins for some amount of change N. Formally, this translates into saying that

\[ N = 2a + 5b \]

for some nonnegative integers a and b. We don't have any control over what these integers are, just that they exist

Inductive Step:

Since we can't really make any assumptions about what a and b are, let's break them down into a couple of cases and see if it's possible to swap coins around to get from N cents to N+1 cents

Case 1: Assume we have at least one 5c coin (b > 0). Then what we can do is take away this coin and add back 3x2cent coins. Formally, we'll update a and b as follows

\[ b \gets b - 1 \]

(this is allowed since b > 0 in this case)\[ a \gets a + 3 \]

Case 2: The only other possibility is that we don't have any 5c coins. This means that N = 2b. Since N >= 4, we know that a >= 2. So it's valid to take away 2x2cent coins (4 cents) and add back 5 cents, which brings us to N+1. Formally,

\[ a \gets a - 2 \]

(this works since a >= 2 in this case)\[ b \gets b + 1 \]

This concludes the proof by induction

Proof by construction:

It's actually much easier to prove this is possible by simply showing how to do it. Many (most?) of the proofs you will do in this class will be more direct and intuitive than induction, so we like to do things that way if we can (though induction is nice to fall back on sometimes when we're stumped). To prove this statement by construction, we have two cases

Case 1: N is evenIf N >= 4 is even, then it's the case that N = 2a for some nonnegative integer a >= 2. In other words, simply use N/2 2cent coins

Case 2: N is oddIf N > 4 is odd, then it's the case that N = 5 + 2a for some a >= 0. Or, in other words, start with a 5c coin, and use (N-5)/2 2cent coins to make change for what remains.

Example 3: Power Set Cardinality 💪

In this example, we'll talk about set notation and the power set. Take a moment to review set notation at this link or in Sipser 0.2 if you're rusty.

Given a set S, the power set P(S) corresponding to S is a set whose elements are all possible subsets of S, including the emptyset ∅. That is a mouthful, so let's look at a few examples. We'll keep it simple by making the set elements be letters

1. The power set P({a}) simply has the following 2 subsets:

\[ \{ \emptyset, \{ a \} \} \]

3. The power set P({a, b}) has the following 4 subsets:

\[ \{ \emptyset, \{ a \}, \{ b \}, \{ a, b \} \} \]

3. The power set P({a, b, c}) has the following 8 subsets:

\[ \{ \emptyset, \{ a \}, \{ b \}, \{ c \}, \{ a, b \}, \{ a, c \}, \{ b, c \}, \{ a, b, c \} \} \]

4. The power set P({a, b, c, d}) has the following 16 subsets:

\[ \{ \emptyset, \{ a \}, \{ b \}, \{ c \}, \{ d \}, \{ a, b \}, \{ a, c \}, \{ a, d \}, \{ b, c \}, \{ b, d \}, \{ c, d \}, \{ a, b, c \}, \{ a, b, d \}, \{ a, c, d \}, \{ b, c, d \} , \{ a, b, c, d \} \} \]

By now, you may be starting to notice a pattern: only powers of two are showing up. In particular, the cardinality (number of elements) in the power set is 2 raised to the cardinality of the original set.

Proposition:

\[ |\mathcal{P}(S)| = 2^{|S|} \]

Let's prove this by induction

Proposition: Base case

We'll start with the set S with cardinality 1. The proposition says that |P(S)| should be 21 = 2. This is indeed true, since the only two possible subsets are the empty set ∅ and the single element in S

Inductive hypothesis:

A set with N elements has a power set with 2N elements

Inductive Step:

Let's use the inductive hypothesis to prove that a set S with N+1 elements has a power set with 2N+1 elements. Let's rip a particular element a out of the set S to create a set S-. With formal notation, we define

\[ S^- = S - a \]

\[ |\mathcal{P}(S^-)| = 2^{N} \]

Then, we note that since S- is a subset of S (formally, S- ⊆ S), it follows that all of the elements of the power set of S belong to all of the elements of the power set of S-. Formally,

\[ \mathcal{P}(S^-) \subseteq \mathcal{P} \]

So there are at least 2N elements in P(S). However, none of the elements in P(S-) include a, so we're failing to count a bunch of elements. We'll add each new element by taking an element from P(S-) and appending a to it. For instance, if we had the element {d, f, k} in P(S-), we'd create a new element {d, f, k, a}. We can do this to 2N such elements in P(S-). So including the original elements and the new elements, we see that we have

\[ |\mathcal{P}(S)| = 2^N + 2^N = 2^{N+1} \]

Which completes the proof

Proof by construction:

There is a nice alternative proof by construction using binary counting which gives a lot more intuition about why this proposition is true. Given a set S, we can associate a binary number with |S| digits to each subset of S. Each digit represents a unique element in S, and we'll make it a 1 if that element is in a particular subset or 0 if it's not there.

As an example, let's consider S = {a, b, c}. We'll let a be the 4s place, b be the 2s place, and c be the 1s place. Then we have the following correspondences of binary numbers

| Subset | abc binary number |

| ∅ | 000 |

| {a} | 100 |

| {b} | 010 |

| {c} | 001 |

| {a, b} | 110 |

| {a, c} | 101 |

| {b, c} | 011 |

| {a, b, c} | 111 |

In general, there are 2N unique numbers with N binary digits, and since each of these numbers is in 1 to 1 correspondence with subsets of S by the construction above, this completes the proof.

The Pigeonhole Principle

https://upload.wikimedia.org/wikipedia/commons/5/5c/TooManyPigeons.jpg

Here's a proof technique that doesn't come up often and which might seem obvious, but which can be applied to very non-obvious scenarios to help. We'll use it in a very specific place at the end of this unit

Applying the pigeonhole principle always amounts to figuring out what the pigeons are and what the holes are in the analogy, and then showing that the inequality K < N holds

Example 1: Birthdays 🎂

Proposition: If there are 400 people in a room, then at least two of these people have the same birthday

Proof: By the pigeonhole principle, let's make the pigeons be the people, so N = 400. Then, the holes are the days of the year, of which there are 365. Since K = 365 < N, then we can conclude by the pigeonhole principle that at least two people have the same birthday

Example 2: Fire Alarm Sock Rush 🧦 🧑🏿🚒

My freshman fall of college I was a light sleeper, so I slept with earplugs. As a result, the fire alarm went off at 3AM one day and I didn't wake up to it. When I finally did wake up, I wanted to make sure I looked as classy as possible stepping outside to the lobby, so I decided to reach into my sock drawer next to me to grab some socks. To make sure I was indeed classy, I wanted to make sure the socks were the same color, but it was dark and I couldn't see, so I had to reach in and grab some individual socks blindly. My wardrobe was simple, so I only had black socks and white socks. Prove that I only needed to grab 3 socks to make sure I could find two socks of the same color among them

Proof: In this case, the pigeons are the individual socks, and the holes are the 2 possible colors of the socks. I claim that I need 3 socks, so N = 3, and K = 2 < N. Therefore, I can conclude by the pigeonhole principle that I'm good to go.

Example 3: Snake Oil Compression Software 📁💾🐍

If someone presents you with lossless compression software that claims to be able to reduce the file size of any file, you know they're lying.

Proof: This is an interesting example of a combination proof by contradiction with the pigeonhole principle baked into it (Click here to review proofs by contradiction). What we'll do is assume the snake oil salesman is telling the truth, and then we'll show that we reach an absurd conclusion, catching them red handed. So let's assume that it is possible to compress any file losslessly. As an example, let's consider all possible files with B bits, of which there are 2B. Let's be very generous and declare a victory if this software reduces our file size by a single bit to B-1 bits. There are 2B-1 such possible compressed files. In terms of the pigeonhole principle, we're saying that N = 2B files must be mapped to K = 2B-1 < N locations. By the pigeonhole principle, this means that at least one of the compressed files must have come from two or more original files. But this violates the fact that this is a lossless compression algorithm, because we need to be able to reconstruct the file perfectly from its compressed form, but there are compressed files which will be ambiguous since they came from multiple sources, and we don't know which one was the original if we were just handed the compressed form. Therefore, by contradiction, we know that the salesman is lying, and we conclude the proof.

Binary Logic And Truth Tables

Take a moment to review the boolean logic section in Sipser 0.2. A truth table is a way to exhaustively enumerate the result of a boolean expression on all possible values of the variables that make it up. We'll be using boolean expressions a lot more in the last unit of the course, but I want to plant the seed now. Here are the truth tables for AND (^), OR (v), NOT (¬), and XOR (⊕)

|

|

|

|

Let's now look at an expression involving three variables and some of these operators

\[ \neg (x \vee y) \wedge z \]

For these truth tables, it's often helpful to add a column for intermediate computations to help show our work. For this example, I'll add a column for (x v y) and then ¬(x v y) before adding the final result

| x | y | z | x v y | ¬(x v y) | ¬(x v y) ^ z |

| 0 | 0 | 0 | 0 | 1 | 0 |

| 0 | 0 | 1 | 0 | 1 | 1 |

| 0 | 1 | 0 | 1 | 0 | 0 |

| 0 | 1 | 1 | 1 | 0 | 0 |

| 1 | 0 | 0 | 1 | 0 | 0 |

| 1 | 0 | 1 | 1 | 0 | 0 |

| 1 | 1 | 0 | 1 | 0 | 0 |

| 1 | 1 | 1 | 1 | 0 | 0 |

What we find in the end is that there's only one assignment of values to x, y, and z that makes this statement true. As we will discuss in section 4, it is computationally hard to design an algorithm to find such an assignment to certain classes of boolean statements in general.